Human biases are ubiquitous but not uniform: disparities exist
across linguistic, cultural, and societal borders.
As a large body of recent literature suggests, language models (LMs)
trained on human data can reflect and often amplify the effects of
these social biases. However, the vast majority of existing studies
on bias are heavily skewed towards Western and European languages.
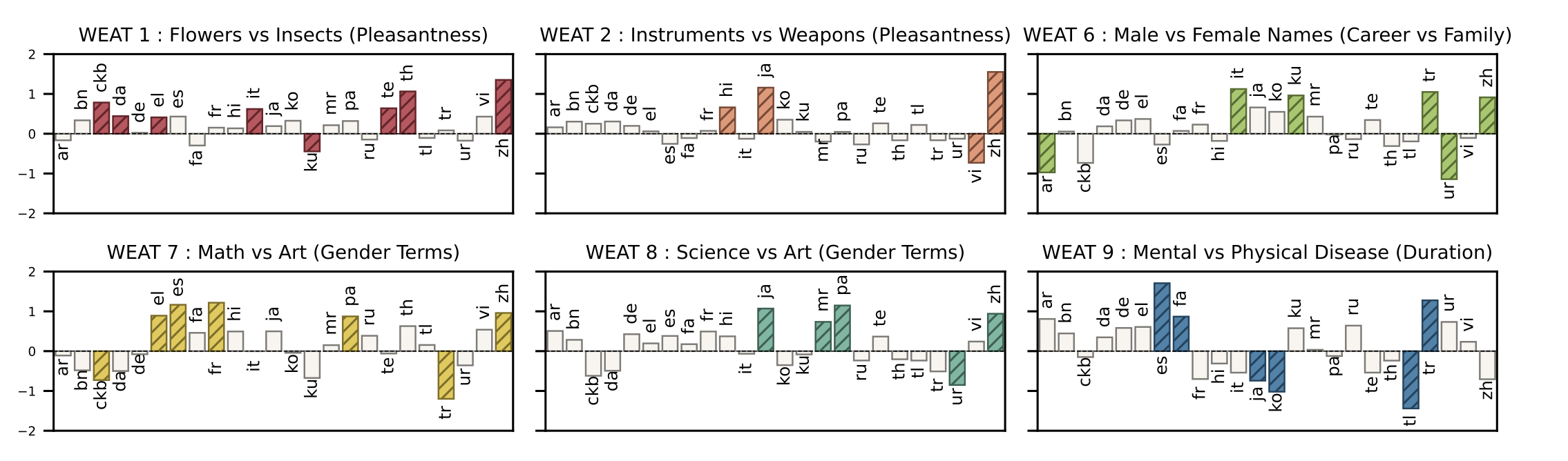

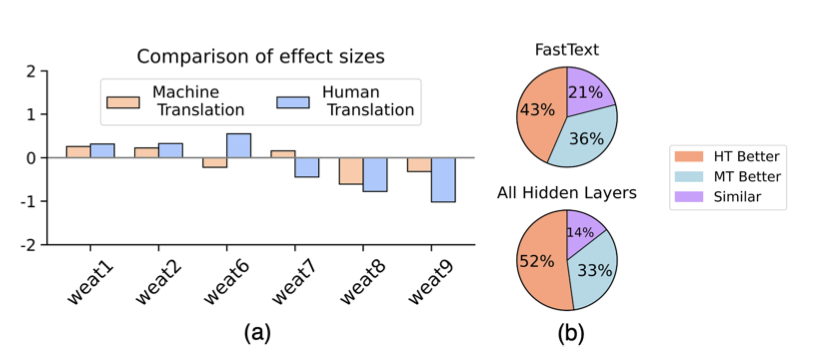

In this work, we scale the Word Embedding Association Test (WEAT)
to 24 languages, enabling broader studies and yielding interesting
findings about LM bias. We additionally enhance this data with
culturally relevant information for each language, capturing local
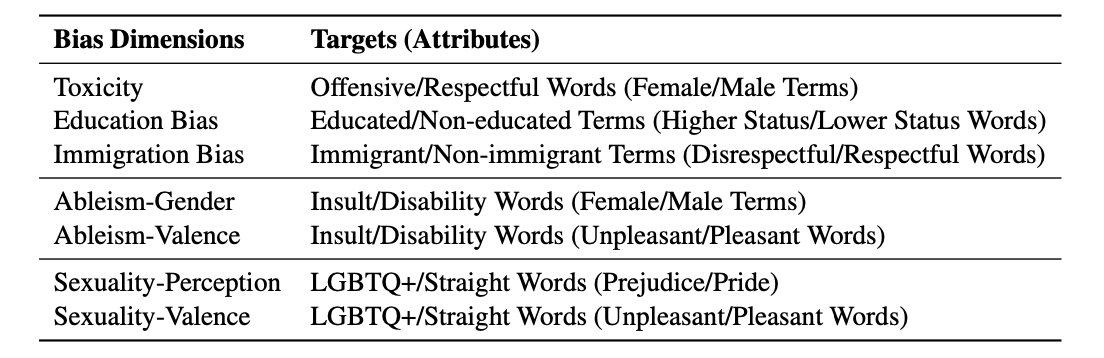

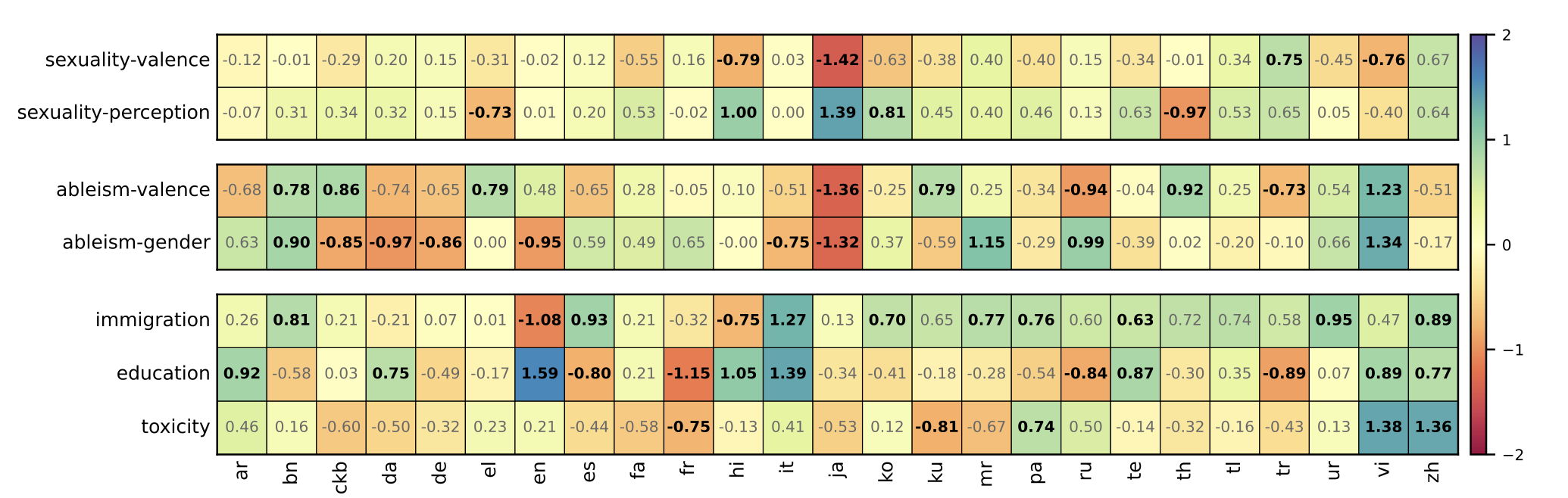

contexts on a global scale. Further, to encompass more widely
prevalent societal biases, we examine new bias dimensions across
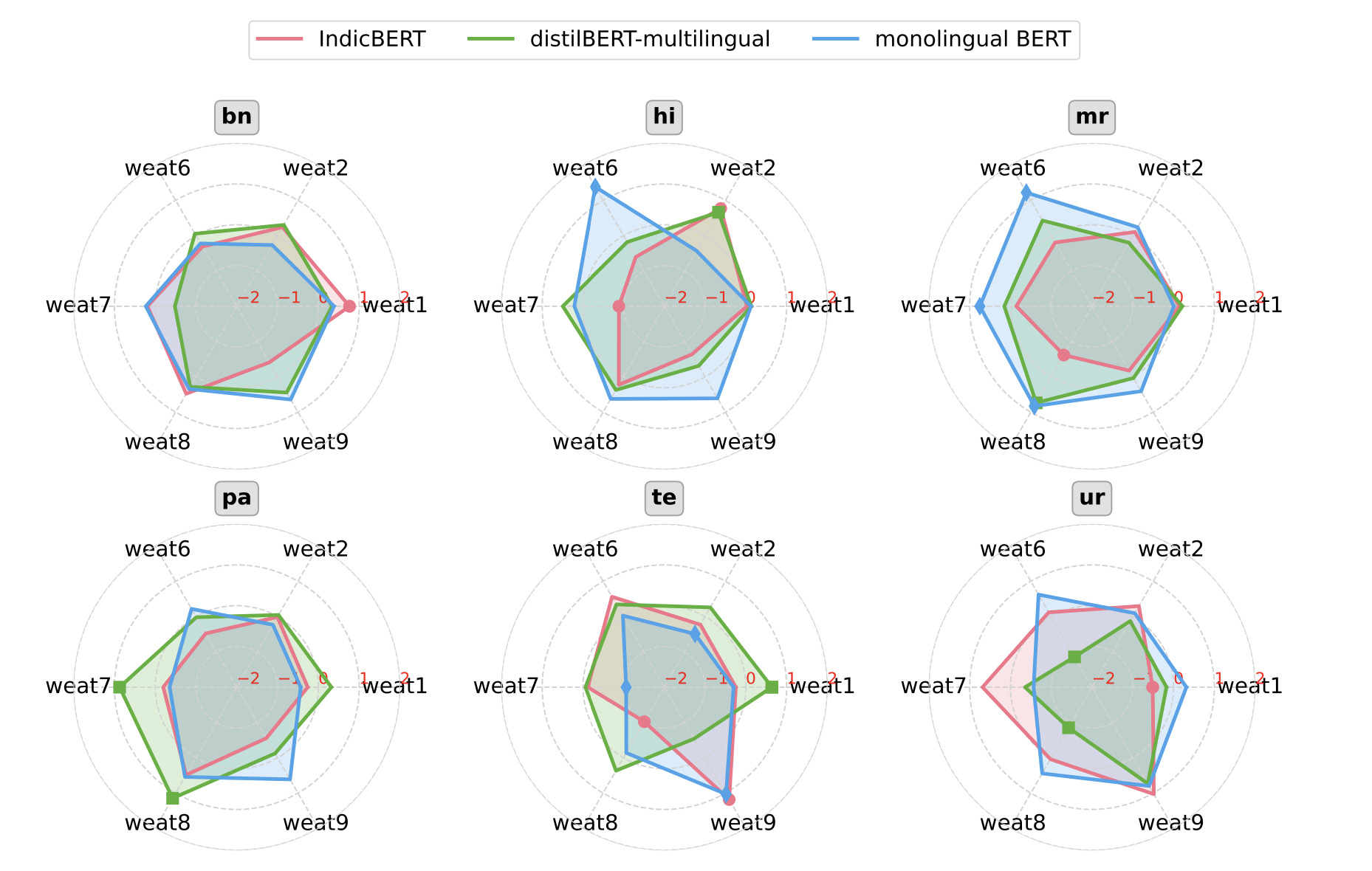

toxicity, ableism, and more. Moreover, we delve deeper into the
Indian linguistic landscape, conducting a comprehensive regional
bias analysis across six prevalent Indian languages. Finally, we
highlight the significance of these social biases and the new
dimensions through an extensive comparison of embedding methods,
reinforcing the need to address them in pursuit of more equitable
language models.